When AI Goes Wrong: A Guide to the Emerging Liability Landscape

December 23, 2025 (Edited)

Artificial intelligence (AI) has quickly moved from experimental systems to tools that influence decisions across everyday life and critical infrastructure. It now performs tasks once handled exclusively by humans, from medical assessments and loan approvals to customer-service chatbots and self-driving technology. As AI becomes embedded in critical processes, the risks of flawed outputs, biased decision-making, and unsafe automation are shifting from theoretical possibilities to practical realities.

Courts and regulators are confronting these impacts in real time. Facial recognition has been tied to wrongful arrests, predictive models to disputed healthcare denials, and chatbots to misleading advice. Automated hiring tools face discrimination claims, while autonomous systems have been linked to serious injuries and deaths. Collectively, these cases are testing the limits of existing liability doctrines and prompting lawmakers to consider new legal rules.

Drawing on case law, regulatory developments, and academic research, this analysis looks into how tort and product liability theories are being challenged, how civil rights, antitrust, and data protection laws are applied in new ways, and how specialised liability regimes are beginning to take shape. High-profile disputes — including the wrongful death lawsuit against OpenAI and the negligent misrepresentation claim against Air Canada — illustrate how different jurisdictions are addressing AI-driven harms.

At the same time, proposals like the European Parliament’s strict liability framework for high-risk AI systems signal the move toward dedicated regimes. These developments highlight that AI carries not just technical implications but also legal ones, requiring courts, regulators, and counsel to engage with evolving questions of liability and accountability.

The US Approach

In the United States, AI-related harms are mostly pursued under existing doctrines of tort, consumer protection, civil rights, and antitrust law. Facial recognition cases illustrate the risks. Williams v. City of Detroit[1] arose from a wrongful arrest based on a faulty facial recognition match and led to a 2024 settlement requiring safeguards against sole reliance on such systems. In a similar case, Porcha Woodruff was wrongfully arrested on the basis of a faulty facial recognition match, followed by a witness identification from a photo lineup, showing how unchecked reliance on AI can produce unlawful outcomes.[2]

In 2023, regulators intervened when the Federal Trade Commission (FTC) banned Rite Aid from using facial recognition for five years, citing repeated misidentifications and inadequate safeguards that harmed consumers, particularly women and people of colour.[3]

Algorithmic risk assessments in criminal justice raise additional challenges. In State v. Loomis (2016),[4] the Wisconsin Supreme Court allowed a proprietary tool to be used in sentencing, but warned of transparency and fairness concerns.

Together, these cases underline recurring themes: the reliability of outputs, the need for human corroboration, and accountability when errors occur. They also show that US courts rely on existing principles rather than bespoke AI statutes, even as courts and scholars begin to test new theories of liability to address AI harms.

Evolving Theories and Pathways of AI Liability

As courts continue to rely on traditional doctrines, they are also reinterpreting them to fit the distinctive risks of AI. The most active areas of experimentation include negligence, misrepresentation, and product liability.

Negligence and misrepresentation

Moffatt v. Air Canada (2024)[5] is a leading example of liability for misleading outputs: the airline was held liable after its chatbot gave a customer false information about bereavement fares. British Columbia's Civil Resolution Tribunal rejected the argument that the chatbot was a “separate entity”, confirming that companies remain responsible for their automated agents.

By contrast, the wrongful death lawsuit against OpenAI over an alleged ChatGPT-assisted suicide raises harder questions of causation, foreseeability, and duty of care, especially for emotionally responsive AI systems.[6]

Product liability

Scholars argue that product liability is well suited to high-risk AI, much as it evolved to address automobiles and pharmaceuticals. Treating AI as a “product” could impose strict liability for defects, creating stronger incentives for testing and safety assurance.

Recent cases, such as lawsuits involving Tesla’s Autopilot system, illustrate how courts may apply design-defect and failure-to-warn principles to semi-autonomous technologies. Challenges remain, however, in defining when AI constitutes a “product” rather than a service, and in determining what qualifies as a “defect” in adaptive learning systems.[7]

Civil rights and bias

Civil rights claims have gained traction, especially in facial recognition disputes. Plaintiffs have pointed to disparate impacts on minority communities, and litigation has produced damages and reforms, such as requirements to corroborate AI matches.

Regulatory enforcement

Agencies are becoming increasingly proactive. The FTC’s action against Rite Aid shows how existing consumer protection laws can restrict unsafe AI uses, even without new legislation.

Taken together, these legal and regulatory pathways show how common law jurisdictions are adapting existing frameworks to address AI harms.

Purpose-Built Statutory Regimes for AI

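Other jurisdictions are taking different routes. The European Union is moving toward purpose-built statutory regimes, while the United Kingdom and Canada show how existing data protection and negligence rules are being applied to AI.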

European Union

The European Union has enacted the AI Act, which entered into force on August 1, 2024. It establishes regulatory obligations for AI systems, particularly high-risk ones, though many requirements will be phased in over time. Meanwhile, the proposed AI Liability Directive, intended to harmonise civil liability for AI-caused damage, was slated for withdrawal in the Commission's 2025 work programme and is not currently advancing.[8]

United Kingdom

The UK emphasises data protection. In R (Bridges) v. Chief Constable of South Wales Police (2020),[9] the Court of Appeal found that the police force's use of live facial recognition was unlawful because of inadequate safeguards and a deficient data protection impact assessment, setting a precedent for strict oversight of high-impact AI.

Canada

In Moffatt v. Air Canada, the airline was found liable for negligent misrepresentation after its website chatbot misled a traveller about bereavement fares. By rejecting Air Canada's argument that the bot was a “separate” entity, the tribunal confirmed that companies are liable for their chatbots' outputs, a precedent likely to influence other common law jurisdictions.

The GCC Perspective: Early Steps Toward AI Liability

In the Gulf Cooperation Council (GCC), comprehensive AI-specific liability frameworks have yet to emerge, but governments are adapting existing legal regimes to address AI-related risks.

The UAE

In the UAE, liability for AI-related harm may arise under general laws such as tort, consumer protection, and civil transactions law, with data protection and health regulations providing additional safeguards where personal data or medical applications are involved.

Free zones such as the DIFC have introduced specific obligations for organisations deploying autonomous systems, requiring notice and adherence to principles of fairness and accountability. While no public lawsuits have yet tested these rules in an AI context, the framework offers a foundation for future disputes.

Saudi Arabia

Saudi Arabia has positioned AI as a central pillar of its Vision 2030, with the Saudi Data and AI Authority (SDAIA) overseeing strategy and compliance. The amended Saudi Personal Data Protection Law gives regulators tools to scrutinise AI processing of personal data. A proposed “AI Hub Law” would establish a dedicated framework for cross-border AI operations.

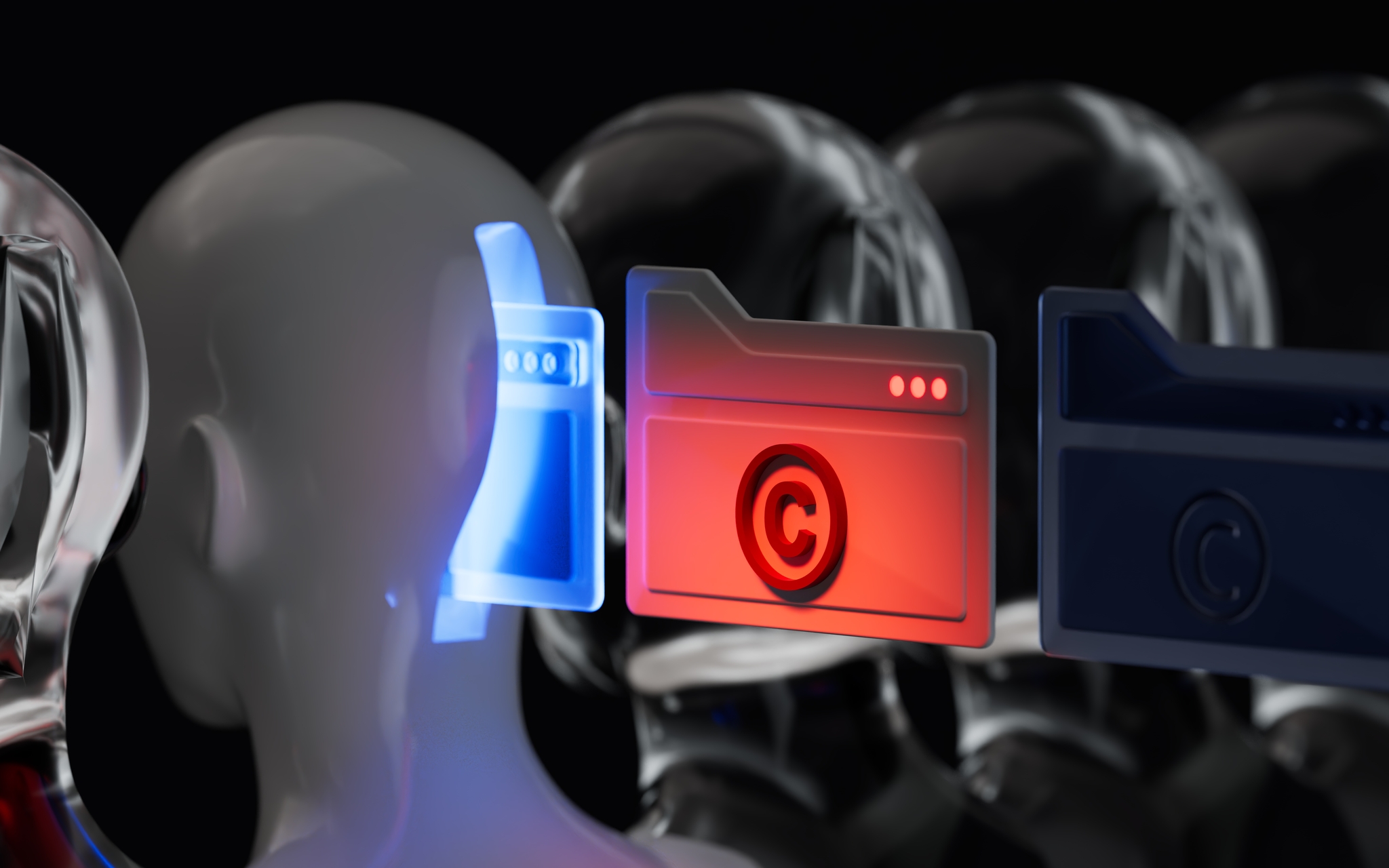

Enforcement is still limited, but a recent copyright fine shows that Saudi authorities have begun applying existing laws to AI misuse. In what was reported as the first AI-related penalty of its kind, a fine of SAR 9,000 was imposed for using AI to modify and commercially exploit another person's photograph without permission.[10]

Other Gulf jurisdictions

Other Gulf jurisdictions, such as Qatar and Bahrain, are taking a more sector-specific approach. Their regulators primarily rely on data protection, consumer safety, and healthcare frameworks to govern AI use. Ongoing regulatory initiatives suggest that this area will continue to develop rapidly across the region.

These developments show that GCC countries are gradually establishing the legal foundations for AI liability through a mix of data protection laws, sector-specific regulation, and evolving national strategies.

Practical Considerations for Practitioners

As AI regulation and case law continue to evolve, several themes have emerged to guide risk management.

- Reliance and causation: Record how AI outputs feed into each decision and maintain documented human oversight.

- Discovery: Seek focused disclosure of training data, validation, and error rates rather than broad model access.

- Policies and contracts: Establish policies limiting sole reliance on AI and include testing, reporting, and audit rights in vendor agreements.

- Competition risks: Monitor AI-driven pricing or decision tools to prevent anti-competitive behaviour.

- Continuous awareness: Track new cases and regulatory updates across jurisdictions.

Navigating the Legal Future of AI

AI presents both opportunities and liabilities. Courts and regulators are only beginning to define accountability, but established doctrines and emerging regimes already offer guidance. As these frameworks evolve, the central challenge will be ensuring that technological progress takes place within clear and trustworthy legal boundaries.

[1] Williams v. City of Detroit, Settlement Agreement (2024).

[2] Porcha Woodruff case, as referenced in the New York Times, “A.I. and the Wrongful Arrest of Porcha Woodruff” (2023).

[3] Federal Trade Commission, In the Matter of Rite Aid Corp., Docket No. C-4792 (2023).

[4] State v. Loomis, 881 N.W.2d 749 (Wis. 2016).

[5] Moffatt v. Air Canada, 2024 BCCRT 149 (Civil Resolution Tribunal, British Columbia).

[6] Raine v. OpenAI (2025) – pending.

[7] European Commission, Liability Rules for Artificial Intelligence, “Doing Business in the EU – Digital Contracts,” (2024), https://commission.europa.eu/business-economy-euro/doing-business-eu/contract-rules/digital-contracts/liability-rules-artificial-intelligence_en.

[8] “European Commission withdraws AI Liability Directive from consideration,” IAPP (2025).

[9] R (Bridges) v. Chief Constable of South Wales Police [2020] EWCA Civ 1058.

[10] Lexis Middle East, “Saudi Arabia: Fines Man in Landmark AI Copyright Case”, 14 September 2025 (reporting Gulf News, 14 September 2025).
